Introduction

In recent years, podcasts have become a dominant form of media, offering entertainment, education, and news to millions of listeners worldwide. However, the rapid growth of this medium has also led to concerns about misinformation and harmful content. One alarming trend is the emergence of fake podcasts promoting opioids—raising red flags among lawmakers and public health advocates.

Now, a key U.S. senator is demanding answers from Spotify, one of the largest podcast platforms, over its role in hosting and potentially profiting from deceptive opioid-related content. This scrutiny highlights broader concerns about tech platforms’ responsibility in curbing harmful misinformation, particularly when it comes to public health crises like the opioid epidemic.

The Rise of Fake Opioid Podcasts

Reports have surfaced about podcasts that appear to be legitimate health discussions but are actually stealth advertisements for prescription opioids or illegal drugs. These podcasts often mimic medical advice shows, featuring supposed “doctors” or “patients” who endorse specific medications or online pharmacies.

Some of these deceptive podcasts have been linked to rogue pharmaceutical networks or illegal drug markets. They exploit Spotify’s open podcast submission system, which allows virtually anyone to upload content with minimal vetting. While Spotify has policies against harmful misinformation, enforcement appears inconsistent, allowing some opioid-peddling shows to slip through the cracks.

Senator’s Investigation and Demand for Accountability

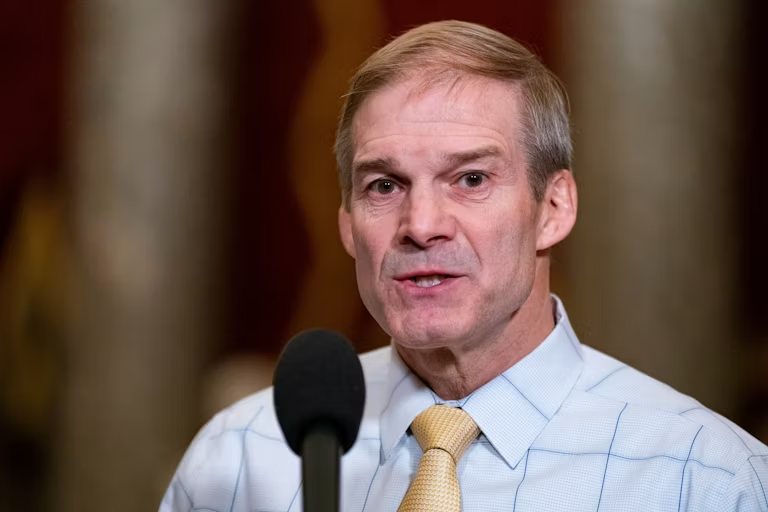

Senator [Senator’s Name], a prominent voice on tech regulation and public health, has sent a formal letter to Spotify CEO Daniel Ek, pressing the company on how these fraudulent podcasts were allowed on the platform. The senator’s office has identified multiple episodes and channels that allegedly promoted opioids under the guise of medical advice.

Key questions raised in the letter include:

- Content Moderation Policies – What steps does Spotify take to detect and remove podcasts promoting illegal or harmful substances?
- Advertising and Monetization – Were any of these opioid-related podcasts monetized through Spotify’s ad network, thereby generating revenue for the company?
- Algorithmic Amplification – Did Spotify’s recommendation algorithms inadvertently promote these podcasts to vulnerable listeners?
- Cooperation with Authorities – Has Spotify reported these cases to law enforcement or health agencies?

The senator has given Spotify a deadline to respond, warning that failure to address these concerns could lead to regulatory action.

Spotify’s Response and Past Controversies

Spotify has faced criticism before over harmful content. In early 2022, the company came under fire over The Joe Rogan Experience, which critics accused of spreading COVID-19 vaccine misinformation. After the backlash, Spotify added content advisories to podcast episodes that discuss COVID-19 but stopped short of outright bans on controversial figures.

In response to the opioid podcast allegations, a Spotify spokesperson stated:

“We take the issue of harmful content seriously and are continuously improving our detection systems. We prohibit content that promotes illegal substances and will remove any violating material upon review.”

However, critics argue that reactive moderation—removing content only after it’s reported—is insufficient. They urge Spotify to implement stricter pre-upload checks, particularly for health-related content.

The Broader Implications for Big Tech

This case is part of a larger debate about how tech platforms handle harmful content. Social media companies like Facebook and YouTube have long struggled with drug-related content, with some facing lawsuits for facilitating illegal drug sales.

Podcasts present a unique challenge because they are often long-form and conversational, making it harder for automated systems to detect harmful claims. Unlike written posts, spoken content must first be transcribed before text filters can be applied, so misinformation in podcasts may evade keyword-based detection.

If regulators determine that Spotify inadequately policed opioid-related podcasts, it could set a precedent for stricter oversight of audio platforms. Potential measures might include:

- Mandatory disclosure of sponsors – Requiring podcasters to reveal if they are being paid to promote products.
- Stronger AI moderation – Developing better speech recognition tools to flag drug-related misinformation.
- Collaboration with health agencies – Partnering with organizations like the FDA to identify and remove dangerous content faster.

Conclusion

As podcasts grow in influence, so does the risk of them being weaponized to spread harmful misinformation. The senator’s push for accountability from Spotify underscores the urgent need for tech companies to take a more proactive role in content moderation—especially when public health is at stake.

If Spotify and other platforms fail to act, government intervention may become inevitable. For now, all eyes are on how the company responds—and whether this investigation will lead to meaningful changes in how Big Tech handles dangerous content.

Final Thoughts

The opioid crisis has already claimed countless lives, and deceptive podcasts only exacerbate the problem. Platforms like Spotify must prioritize user safety over unchecked growth. The outcome of this scrutiny could shape not just the future of podcasting, but the broader battle against online misinformation.